|

|

Songyao Jiang

I am a Senior Applied Scientist on Amazon's Artificial General Intelligence (AGI) Foundations team,

specializing in multimodal foundation models. I lead multimodal joint reinforcement learning efforts that improve cross-modal understanding and reasoning across speech, images, video, and text—helping bring Amazon Nova 1.0 and 2.0 from research to launch.

My current research interests lie in generative models and computer vision, including multimodal LLMs, vision-language models, and vision encoders.

I received my Ph.D. in Computer Engineering at Northeastern University,

SmileLab

advised by Dr. Yun (Raymond) Fu. I received my master's degree from the University of Michigan

and my bachelor's degree from The Hong Kong Polytechnic University.

I was the team leader when we won

the CVPR 2021 Challenge on Large Scale Signer Independent Isolated Sign Language Recognition.

Our team (smilelab2021) ranked 1st in both RGB and RGB-D tracks.

I am also an innovative entrepreneur. I participated in NSF I-Corps and NSF I-Corps Sites at NEU as an Entrepreneurial Lead. I was a core founding member of two AI startup companies: Giaran, Inc. and AInnovation Labs, Inc.

Giaran was acquired by Shiseido Americas in Nov. 2017 [News].

Beyond academics, I am a backpacking & hiking enthusiast and a skilled astronomy & landscape photographer. Here is my Little Gallery.

Email /

CV /

Google Scholar /

GitHub /

LinkedIn

|

|

News

- [12/2025] Our team's new Nova-family MLLMs are announced at AWS re:Invent. [Link]

- [11/2025] One paper is submitted to CVPR 2026.

- [02/2025] One paper is published in Analytical Chemistry. [Link]

- [01/2025] One patent is granted. [News] [Link]

- [11/2024] Our team's Nova multi-modal foundation models are launched at AWS re:Invent. [Link]

- [08/2024] One paper is accepted to AMLC 2024.

- [11/2023] Joined the Amazon AGI (Artificial General Intelligence) Foundations team. [Link]

- [04/2023] One patent application is filed.

- [11/2022] One paper is accepted to AAAI 2023. [Link]

- [06/2022] Joined Amazon Lab126 as an Applied Scientist.

- [05/2022] Received an NSF I-Corps Grant. [link]

- [04/2022] Successfully defended my Ph.D. dissertation.

- [04/2022] Participated in NSF I-Corps Spring 2022 as an Entrepreneurial Lead.

- [12/2021] Awarded the NVIDIA CCS Best Student Paper Award.

- [11/2021] One oral paper is accepted to FG 2021.

- [10/2021] One patent is published: WO/2021/163103.

[link]

- [07/2021] One paper is accepted to ACM MM 2021.

- [06/2021] Our team ranked 4th in the CVPR21 Challenge on Agriculture-Vision.

[code]

[link]

[CodaLab]

- [05/2021] One paper is accepted to CVPR 2021 Workshops.

- [04/2021] Our team won first place in both the RGB and RGB-D tracks of the CVPR21 Challenge on Large Scale Signer Independent Isolated Sign Language Recognition.

[code]

[link]

[news]

- [01/2021] One paper is accepted to IEEE TIP.

- [12/2020] One paper is accepted to Nature: Commun. Biol.

- [11/2020] One patent is published: WO/2020/232069.

[link]

- [10/2020] One patent is granted: US Patent 10,825,219.

[link]

- [03/2020] One paper is accepted to FG 2020.

- [02/2020] One paper is accepted to Lab on a Chip.

- [01/2020] One patent is published: US/2020/0015575.

[link]

- [03/2019] One paper is accepted to FG 2019.

- [01/2019] Received the GapFund360 Award.

[news]

- …

|

|

Selected Research

I'm interested in generative models, computer vision, machine learning, and computational photography.

My current research includes multi-modal large language models, vision encoders, pose estimation, and sign language recognition.

|

|

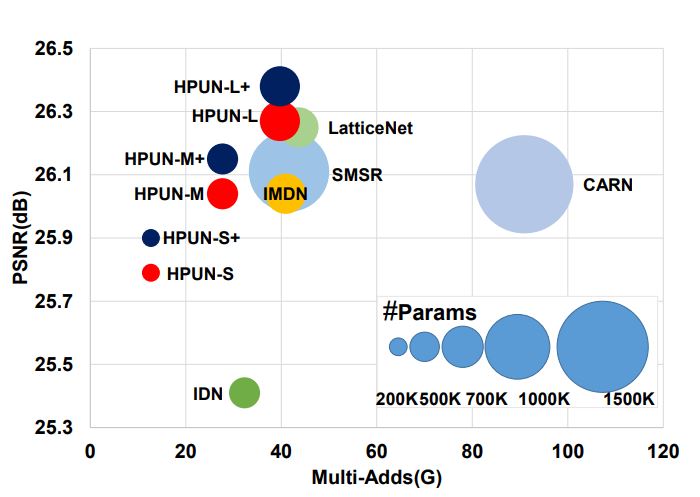

Hybrid Pixel-Unshuffled Network for Lightweight Image Super-Resolution

Bin Sun, Yulun Zhang, Songyao Jiang, and Yun Fu

AAAI, 2023

Paper /

GitHub /

Demo

Downsampling features for multi-resolution fusion is an efficient and effective way to improve the performance of visual recognition.

Still, it is counter-intuitive in the SR task, which needs to project a low-resolution input to high-resolution. In this paper,

we propose a novel Hybrid Pixel-Unshuffled Network (HPUN) by introducing an efficient and effective downsampling module into the SR task.
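The pixel-unshuffle rearrangement that gives HPUN its name can be sketched in a few lines. This is only the generic space-to-depth operation on a single-channel image, not the paper's downsampling module; the function name `pixel_unshuffle` and the toy 4x4 input are illustrative assumptions.

```python
from typing import List

Grid = List[List[float]]


def pixel_unshuffle(image: Grid, r: int) -> List[Grid]:
    """Rearrange an (H*r) x (W*r) single-channel image into r*r channels of size H x W.

    Hypothetical helper for illustration: no pixel is discarded, so spatial
    resolution drops by a factor of r while every input value is kept.
    """
    height, width = len(image), len(image[0])
    if height % r or width % r:
        raise ValueError("image height and width must be divisible by r")
    channels = []
    for i in range(r):        # row offset inside each r x r block
        for j in range(r):    # column offset inside each r x r block
            channels.append(
                [[image[h * r + i][w * r + j] for w in range(width // r)]
                 for h in range(height // r)]
            )
    return channels


# Toy input: a 4x4 image holding the values 0..15 in row-major order.
image = [[row * 4 + col for col in range(4)] for row in range(4)]
channels = pixel_unshuffle(image, 2)
```

With `r = 2`, the 4x4 input becomes four 2x2 channels, one per position inside each 2x2 block.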

|

|

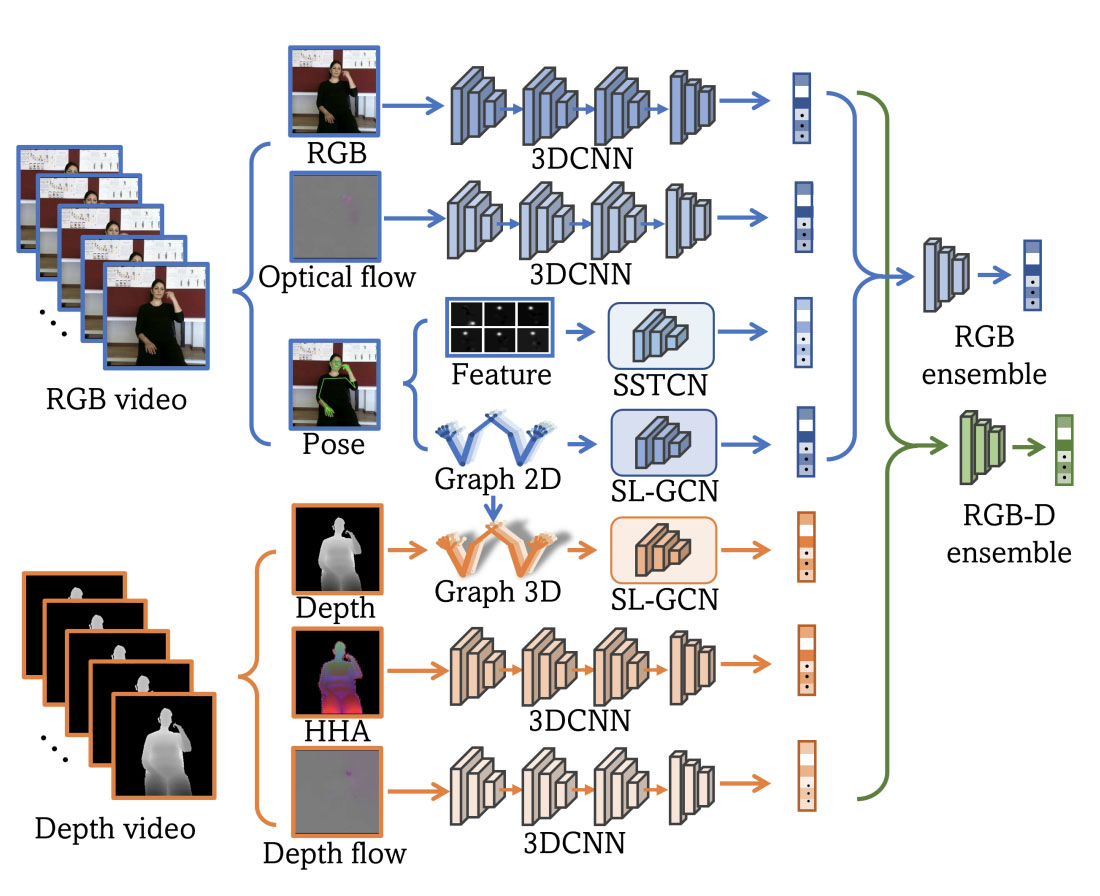

Sign Language Recognition via Skeleton Aware Multi-modal Ensemble (SAM-SLR-v2)

Songyao Jiang, Bin Sun, Lichen Wang, Yue Bai, Kunpeng Li, and Yun Fu

ArXiv Preprint, 2021

Paper /

GitHub

This paper is an extension of our previous work SAM-SLR that won the sign language recognition challenge in CVPR 2021.

Our major improvements include introducing a new Keypoint3D modality that improves the ensemble accuracy in the RGB-D scenario,

proposing a Global Ensemble Model to automatically learn multi-modal ensemble,

and providing extensive experiments on three major SLR datasets.

Our approach achieves state-of-the-art performance by significant margins.
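As a rough illustration of what a multi-modal ensemble does, the sketch below fuses per-modality class scores with a weighted sum and picks the top class. It is not the SAM-SLR-v2 implementation: the modality names, scores, and hand-set weights are hypothetical, whereas the Global Ensemble Model described above learns how to combine the modalities.

```python
from typing import Dict, List


def ensemble_scores(scores: Dict[str, List[float]],
                    weights: Dict[str, float]) -> List[float]:
    """Weighted late fusion: sum each modality's class scores scaled by its weight."""
    num_classes = len(next(iter(scores.values())))
    fused = [0.0] * num_classes
    for modality, class_scores in scores.items():
        if len(class_scores) != num_classes:
            raise ValueError(f"modality {modality!r} has a different number of classes")
        weight = weights[modality]
        for k, score in enumerate(class_scores):
            fused[k] += weight * score
    return fused


def predict(scores: Dict[str, List[float]], weights: Dict[str, float]) -> int:
    """Return the index of the class with the highest fused score."""
    fused = ensemble_scores(scores, weights)
    return max(range(len(fused)), key=fused.__getitem__)


# Hypothetical per-modality scores for a 3-class toy problem.
scores = {
    "skeleton": [0.2, 0.7, 0.1],
    "rgb": [0.5, 0.3, 0.2],
    "depth": [0.6, 0.3, 0.1],
}
# Hand-set weights, standing in for weights a learned ensemble would produce.
weights = {"skeleton": 1.0, "rgb": 0.5, "depth": 0.25}

fused = ensemble_scores(scores, weights)
label = predict(scores, weights)
```

In this toy case the skeleton stream outvotes the other two because it carries the largest weight, which is the kind of trade-off a learned ensemble would tune automatically.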

|

|

Skeleton Aware Multi-modal Sign Language Recognition (SAM-SLR)

Songyao Jiang, Bin Sun, Lichen Wang, Yue Bai, Kunpeng Li, and Yun Fu

Champion of the CVPR21 Challenge on Sign Language Recognition

CVPR21 Workshop, 2021

Paper /

GitHub /

YouTube /

RGB Leaderboard /

RGB-D Leaderboard

We proposed a skeleton-aware multi-modal sign language recognition framework (SAM-SLR) to capture information from multiple modalities

and ensemble them to further boost performance. Our team ranked 1st in both the RGB and RGB-D tracks of the challenge.

|

|

Multi-label Semantic Segmentation for Multi-modal Agricultural Pattern Recognition

Songyao Jiang, Bin Sun, and Yun Fu

Ranked 4th in the CVPR21 Challenge on the Agriculture-Vision, 2021

GitHub /

Leaderboard

We developed a framework consisting of a GCN-based and a DeepLabv3-based multi-label semantic segmentation model to recognize agricultural patterns (e.g., cloud shadow, double plant, standing water, and weed cluster) in multi-modal RGB and NIR aerial images. Our final result achieved 0.507 mIoU.
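For readers unfamiliar with the metric, a minimal sketch of mean intersection-over-union (mIoU) on per-class binary masks follows. It shows the textbook definition only; the challenge's official scoring may differ in details, and the function name `mean_iou` and the toy masks are assumptions made for illustration.

```python
from typing import Sequence


def mean_iou(pred_masks: Sequence[Sequence[int]],
             target_masks: Sequence[Sequence[int]]) -> float:
    """Average per-class IoU over flattened binary masks (one mask per class)."""
    ious = []
    for pred, target in zip(pred_masks, target_masks):
        intersection = sum(1 for p, t in zip(pred, target) if p and t)
        union = sum(1 for p, t in zip(pred, target) if p or t)
        if union:  # skip classes absent from both prediction and ground truth
            ious.append(intersection / union)
    if not ious:
        raise ValueError("no class is present in either prediction or target")
    return sum(ious) / len(ious)


# Toy example: two classes over four pixels, masks flattened to 1-D.
pred_masks = [[1, 1, 0, 0], [0, 0, 1, 1]]
target_masks = [[1, 0, 0, 0], [0, 1, 1, 1]]
score = mean_iou(pred_masks, target_masks)
```

Here the first class has IoU 1/2 and the second 2/3, so the mean is 7/12.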

|

|

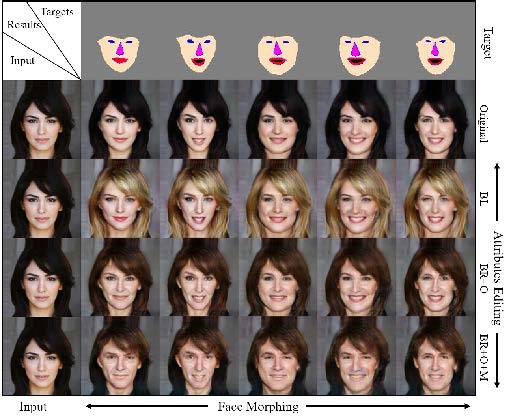

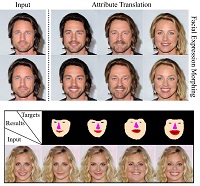

Geometrically Editable Face Image Translation With Adversarial Networks

Songyao Jiang, Zhiqiang Tao, and Yun Fu

IEEE Transactions on Image Processing (TIP), 2021

Paper /

GitHub

We formulate the image translation problem as multi-domain mappings in both geometric and attribute

directions and propose a novel Geometrically Editable Generative Adversarial Networks (GEGAN) model to learn such mappings of geometric editable translations.

|

|

Spatially Constrained GAN for Face and Fashion Synthesis

Songyao Jiang, Hongfu Liu, Yue Wu and Yun Fu

IEEE International Conference on Automatic Face & Gesture Recognition (FG), 2021

NVIDIA CCS Best Student Paper Award

Paper /

GitHub /

Award /

Website

Image generation has attracted tremendous attention in both academia and industry, especially for criminal portraits and fashion design. We propose a novel Spatially Constrained Generative Adversarial Network, which decouples the spatial constraints from the latent vector and makes them available as additional controllable signals.

|

|

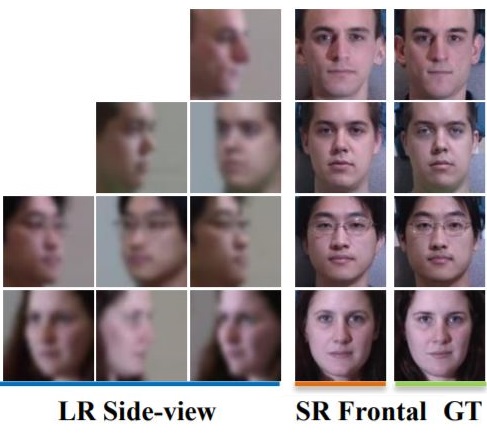

SuperFront: From Low-resolution to High-resolution Frontal Face Synthesis

Yu Yin, Joseph P. Robinson, Songyao Jiang, Yue Bai, Can Qin and Yun Fu

ACM Multimedia (ACMMM), 2021

Paper / GitHub

Existing face frontalization methods tend to focus on samples with variation in pose, but assume the data

is high in quality. We propose a generative adversarial network (GAN)-based model to generate high-quality,

identity-preserving frontal faces from one or multiple low-resolution (LR) faces with extreme poses.

|

|

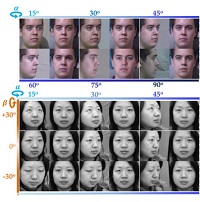

Dual-Attention GAN for Large-Pose Face Frontalization

Yu Yin, Songyao Jiang, Joseph P. Robinson, and Yun Fu

IEEE International Conference on Automatic Face & Gesture Recognition (FG), 2020

Paper /

GitHub

Face frontalization provides an effective and efficient way for face data augmentation and further improves the

face recognition performance in extreme-pose scenarios. We present a novel

Dual-Attention Generative Adversarial Network (DA-GAN) for

photo-realistic face frontalization by capturing both contextual

dependencies and local consistency during GAN training.

|

|

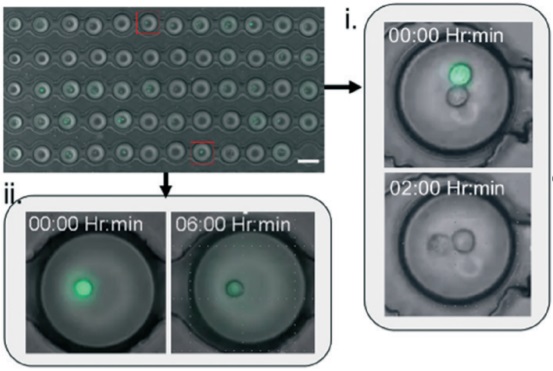

Machine Learning-aided Quantification of Antibody-based Cancer Immunotherapy by Natural Killer Cells in Microfluidic Droplets

Saheli Sarkar, Wenjing Kang, Songyao Jiang, Kunpeng Li, Somak Ray, Ed Luther, Alexander R Ivanov, Yun Fu, and Tania Konry

Lab on a Chip, 2020

Natural killer (NK) cells have emerged as an effective alternative option to T cell-based immunotherapies,

particularly against liquid (hematologic) tumors. This paper describes a microfluidic

droplet-based cytotoxicity assay for quantitative comparison of immunotherapeutic NK-92 cell interaction with various types of target cells.

Machine learning algorithms were developed to assess the dynamics of individual effector-target cell pair conjugation and target death in droplets in a semi-automated manner.

|

|

Segmentation Guided Image-to-Image Translation with Adversarial Networks

Songyao Jiang, Zhiqiang Tao and Yun Fu

IEEE International Conference on Automatic Face & Gesture Recognition (FG), 2019

Paper /

GitHub /

ArXiv

Recent image-to-image translation methods neglect to utilize higher-level and instance-specific

information to guide the training process, leading to a great

deal of unrealistic generated images of low quality. We propose a novel Segmentation

Guided Generative Adversarial Networks, which

leverages semantic segmentation to further boost the generation

performance and provide spatial mapping.

|

|

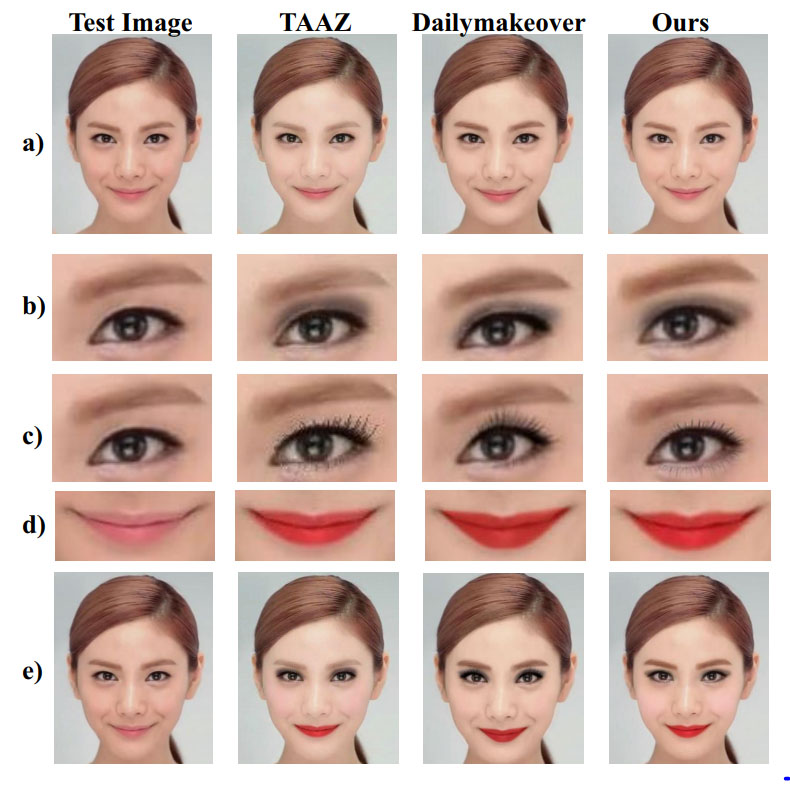

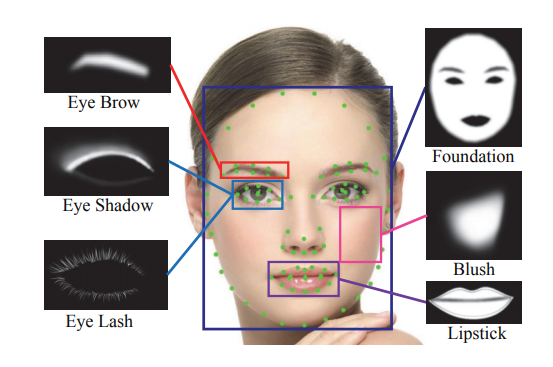

Rule-Based Facial Makeup Recommendation System

Taleb Alashkar, Songyao Jiang and Yun Fu

IEEE International Conference on Automatic Face & Gesture Recognition (FG), 2017

An automatic and smart facial makeup recommendation

and synthesis system is proposed. An automatic facial

makeup synthesis system is also developed to apply the recommended style

to the facial image. A new dataset with 961 photos of different women was

collected and labeled.

|

|

Examples-Rules Guided Deep Neural Network for Makeup Recommendation

Taleb Alashkar, Songyao Jiang, Shuyang Wang and Yun Fu

AAAI Conference on Artificial Intelligence (AAAI), 2017

We consider a fully automatic makeup recommendation system and propose a novel

examples-rules guided deep neural network approach. Makeup-related facial traits are classified into structured

coding. These facial traits are fed into an examples-rules guided deep

neural recommendation model, which makes use of pairs of Before-After images

and makeup artist knowledge jointly. To visualize the recommended makeup

style, an automatic makeup synthesis system is developed as well.

|

Publications

- Q. Li, J. Zhou, S. Jiang, Y. Fu, and M. Su, “Single-Cell Array Enhanced Cell Damage Recognition Using Artificial Intelligence for Anticancer Drug Discovery,”

Analytical Chemistry, Vol. 97, Iss. 7, 2025.

- B. Sun, Y. Zhang, S. Jiang, and Y. Fu, “Hybrid Pixel-Unshuffled Network for Lightweight Image Super-Resolution,”

in Proceedings of AAAI Conference on Artificial Intelligence (AAAI), 2023.

- S. Jiang, B. Sun, L. Wang, Y. Bai, K. Li, and Y. Fu, “Sign Language Recognition via Skeleton-aware Multi-modal Ensemble,”

arXiv preprint arXiv:2110.06161, 2021.

- S. Jiang, B. Sun, L. Wang, Y. Bai, K. Li, and Y. Fu, “Skeleton Aware Multi-modal Sign Language Recognition,”

in Proceedings of IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, 2021.

- S. Jiang, Z. Tao, and Y. Fu, “Geometrically Editable Face Image Translation with Adversarial Networks,”

IEEE Transactions on Image Processing (TIP), 2021.

- S. Jiang, H. Liu, Y. Wu, and Y. Fu, “Spatially Constrained GAN for Face and Fashion Synthesis,”

in Proceedings of 16th IEEE International Conference on Automatic Face & Gesture Recognition (FG), 2021.

- Y. Yin, J. P. Robinson, S. Jiang, Y. Bai, C. Qin, and Y. Fu, “SuperFront: From Low-resolution to High-resolution Frontal Face Synthesis,”

in Proceedings of ACM Multimedia (ACMMM), 2021.

- Y. Yin, S. Jiang, J. P. Robinson, and Y. Fu, “Dual-attention GAN for Large-pose Face Frontalization,”

in Proceedings of 15th IEEE International Conference on Automatic Face & Gesture Recognition (FG), 2020.

- S. Sarkar, W. Kang, S. Jiang, K. Li, S. Ray, E. Luther, ... and T. Konry, “Machine learning-aided quantification of antibody-based cancer immunotherapy by natural killer cells in microfluidic droplets,”

Lab on a Chip, 20(13), pp. 2317-2327, 2020.

- Z. Hong, T. Sun, S. Jiang, K. Li, Y. Fu, H. Xu, J. Zhang, Y. Liu, Q. Ye, and H. Cang, “Harnessing Deep Learning to Overcome Photo-toxicity for Live-cell Imaging,”

Under Review, 2020.

- S. Jiang, Z. Tao, and Y. Fu, “Segmentation Guided Image-to-Image Translation with Adversarial Networks,”

in Proceedings of 14th IEEE International Conference on Automatic Face & Gesture Recognition (FG), 2019.

- T. Alashkar, S. Jiang, and Y. Fu, “Rule-Based Facial Makeup Recommendation System,”

in Proceedings of 12th IEEE International Conference on Automatic Face & Gesture Recognition (FG), 2017.

- T. Alashkar, S. Jiang, S. Wang, and Y. Fu, “Examples-Rules Guided Deep Neural Network for Makeup Recommendation,”

in Proceedings of AAAI Conference on Artificial Intelligence (AAAI), 2017.

- S. Jiang and T. Kato, “Dynamic Modelling of Combined Cycle Power Plant for Load Frequency Control with Large Penetration of Renewable Energy,”

in 7th JUACEP Workshop, 2014.

|

Patents

- S. Gao, J. Thomas, J. Zhang, S. Jiang, J. Luo, “Stylus Input Compensation System”.

US Patent 12,293,040.

- Y. Fu, S. Jiang, B. Sun, “Light-Weight Pose Estimation Network with Multi-Scale Heatmap Fusion”.

US Patent 12,205,317.

- Y. Fu, S. Jiang, “Segmentation Guided Image Generation with Adversarial Networks”.

US Patent 10,825,219.

- Y. Fu, S. Jiang, “Video 2D Multi-person Pose Estimation using Multi-frame Refinement and Optimization”.

WIPO Patent App. No.: WO 2020/232069.

- Y. Fu, S. Wang, S. Lee, S. Jiang, B. Sun, H. Mao, K. H. E. Cheung, “Systems and Methods for Virtual Facial

Makeup Removal and Simulation, Fast Facial Detection and Landmark Tracking, Reduction in … ”.

US Patent App. No: 16/584,310.

|

Honors & Awards

- NSF I-Corps Grant, National Science Foundation, 2022.

- NVIDIA CCS Best Student Paper Award, FG 2021.

- Champion of the CVPR21 Challenge on Large-Scale Signer-independent Isolated Sign Language Recognition (RGB & RGB-D tracks), 2021.

- 4th place in the CVPR21 Challenge on Agriculture-Vision (supervised track), 2021.

- PhD Network Travel Grant, Northeastern University, USA, 2019.

- GapFund360 Award, Northeastern University, USA, 2018.

- NSF I-Corps Grant, National Science Foundation, 2016.

- JUACEP Research Award, Nagoya University, Japan, 2014.

- JASSO Scholarship, Nagoya University, Japan, 2014.

- Outstanding Scholarship, Hong Kong Polytechnic University, Hong Kong, 2010, 2011, 2012, 2013.

|

Academic Service

Conference PC Member and Reviewer:

- International Conference on Computer Vision (ICCV)

- European Conference on Computer Vision (ECCV)

- International Joint Conference on Artificial Intelligence (IJCAI)

- IEEE International Conference on Automatic Face & Gesture Recognition (FG)

- IEEE International Conference on Data Mining (ICDM)

- IEEE International Conference on Multimedia Information Processing and Retrieval (MIPR)

Journal Reviewer:

- IEEE Transactions on Image Processing (TIP)

- IEEE Transactions on Neural Networks and Learning Systems (TNNLS)

- IEEE Transactions on Multimedia (TMM)

- Journal of Visual Communication and Image Representation (JVCI)

- The Visual Computer (TVCJ)

- IET Image Processing

- Journal of Electronic Imaging (JEI)

Workshop Reviewer:

- IEEE International Workshop on Analysis and Modeling of Faces and Gestures (AMFG)

|

This website is generated using source code from Jon Barron.

|

|

About

News

Research

Publications

Awards

Service
